Mirror of https://github.com/qurator-spk/eynollah.git (synced 2026-01-29 13:46:58 +01:00)

Merge pull request #23 from qurator-spk/cneud-spelling

Fix spelling issues

commit 1ee219b657

9 changed files with 300 additions and 299 deletions

22

README.md

22

README.md

|

|

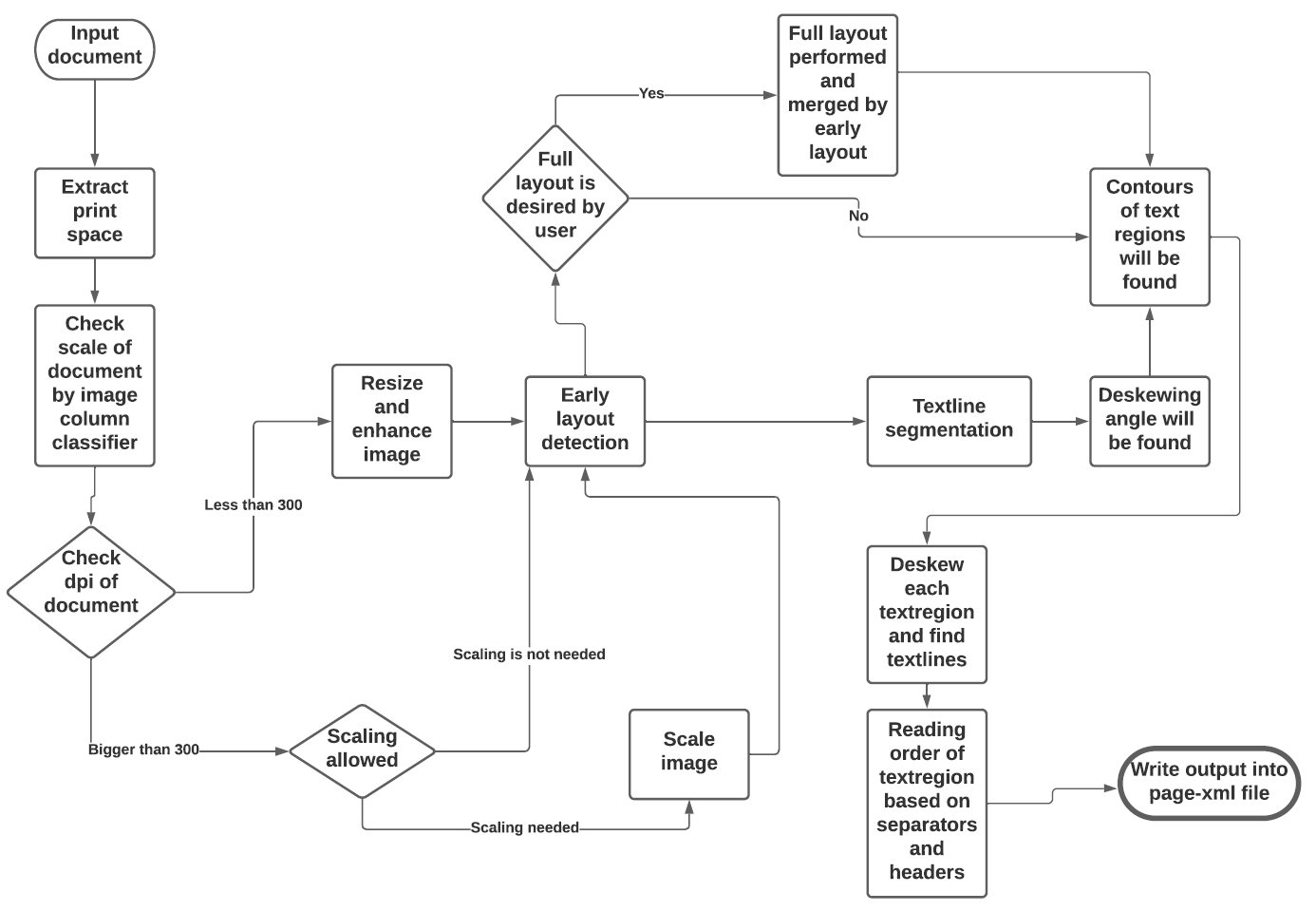

@@ -25,30 +25,30 @@ The tool uses a combination of various models and heuristics (see flowchart belo
 * [Scale classification](https://github.com/qurator-spk/eynollah#Scale_classification)
 * [Heuristic methods](https://https://github.com/qurator-spk/eynollah#heuristic-methods)

-The first three stages are based on [pixelwise segmentation](https://github.com/qurator-spk/sbb_pixelwise_segmentation).
+The first three stages are based on [pixel-wise segmentation](https://github.com/qurator-spk/sbb_pixelwise_segmentation).



 ## Border detection
-For the purpose of text recognition (OCR) and in order to avoid noise being introduced from texts outside the printspace, one first needs to detect the border of the printed frame. This is done by a binary pixelwise-segmentation model trained on a dataset of 2,000 documents where about 1,200 of them come from the [dhSegment](https://github.com/dhlab-epfl/dhSegment/) project (you can download the dataset from [here](https://github.com/dhlab-epfl/dhSegment/releases/download/v0.2/pages.zip)) and the remainder having been annotated in SBB. For border detection, the model needs to be fed with the whole image at once rather than separated in patches.
+For the purpose of text recognition (OCR) and in order to avoid noise being introduced from texts outside the printspace, one first needs to detect the border of the printed frame. This is done by a binary pixel-wise-segmentation model trained on a dataset of 2,000 documents where about 1,200 of them come from the [dhSegment](https://github.com/dhlab-epfl/dhSegment/) project (you can download the dataset from [here](https://github.com/dhlab-epfl/dhSegment/releases/download/v0.2/pages.zip)) and the remainder having been annotated in SBB. For border detection, the model needs to be fed with the whole image at once rather than separated in patches.

 ## Layout detection
-As a next step, text regions need to be identified by means of layout detection. Again a pixelwise segmentation model was trained on 131 labeled images from the SBB digital collections, including some data augmentation. Since the target of this tool are historical documents, we consider as main region types text regions, separators, images, tables and background - each with their own subclasses, e.g. in the case of text regions, subclasses like header/heading, drop capital, main body text etc. While it would be desirable to detect and classify each of these classes in a granular way, there are also limitations due to having a suitably large and balanced training set. Accordingly, the current version of this tool is focussed on the main region types background, text region, image and separator.
+As a next step, text regions need to be identified by means of layout detection. Again a pixel-wise segmentation model was trained on 131 labeled images from the SBB digital collections, including some data augmentation. Since the target of this tool are historical documents, we consider as main region types text regions, separators, images, tables and background - each with their own subclasses, e.g. in the case of text regions, subclasses like header/heading, drop capital, main body text etc. While it would be desirable to detect and classify each of these classes in a granular way, there are also limitations due to having a suitably large and balanced training set. Accordingly, the current version of this tool is focussed on the main region types background, text region, image and separator.

 ## Textline detection
-In a subsequent step, binary pixelwise segmentation is used again to classify pixels in a document that constitute textlines. For textline segmentation, a model was initially trained on documents with only one column/block of text and some augmentation with regards to scaling. By fine-tuning the parameters also for multi-column documents, additional training data was produced that resulted in a much more robust textline detection model.
+In a subsequent step, binary pixel-wise segmentation is used again to classify pixels in a document that constitute textlines. For textline segmentation, a model was initially trained on documents with only one column/block of text and some augmentation with regard to scaling. By fine-tuning the parameters also for multi-column documents, additional training data was produced that resulted in a much more robust textline detection model.

 ## Image enhancement
-This is an image to image model which input was low quality of an image and label was actually the original image. For this one we did not have any GT so we decreased the quality of documents in SBB and then feed them into model.
+This is an image to image model which input was low quality of an image and label was actually the original image. For this one we did not have any GT, so we decreased the quality of documents in SBB and then feed them into model.

 ## Scale classification
 This is simply an image classifier which classifies images based on their scales or better to say based on their number of columns.

 ## Heuristic methods
 Some heuristic methods are also employed to further improve the model predictions:
-* After border detection, the largest contour is determined by a bounding box and the image cropped to these coordinates.
+* After border detection, the largest contour is determined by a bounding box, and the image cropped to these coordinates.
 * For text region detection, the image is scaled up to make it easier for the model to detect background space between text regions.
-* A minimum area is defined for text regions in relation to the overall image dimensions, so that very small regions that are actually noise can be filtered out.
+* A minimum area is defined for text regions in relation to the overall image dimensions, so that very small regions that are noise can be filtered out.
 * Deskewing is applied on the text region level (due to regions having different degrees of skew) in order to improve the textline segmentation result.
 * After deskewing, a calculation of the pixel distribution on the X-axis allows the separation of textlines (foreground) and background pixels.
 * Finally, using the derived coordinates, bounding boxes are determined for each textline.
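The last two heuristics in the README hunk above (pixel distribution along the X-axis, then one bounding box per textline) can be illustrated with a small projection-profile sketch. This is not the repository's implementation: the function names `row_profile`, `split_textlines` and `textline_boxes`, the plain nested-list mask and the `min_pixels` threshold are all assumptions made for illustration, and the input is assumed to be an already deskewed binary textline mask.

```python
from typing import List, Tuple

Mask = List[List[int]]  # assumed input: 1 = textline pixel, 0 = background


def row_profile(mask: Mask) -> List[int]:
    """Count foreground pixels in each row (projection along the X-axis)."""
    return [sum(row) for row in mask]


def split_textlines(mask: Mask, min_pixels: int = 1) -> List[Tuple[int, int]]:
    """Return (top, bottom) row ranges whose profile reaches min_pixels.

    `bottom` is exclusive; each range is one candidate textline.
    """
    lines = []
    start = None
    for y, count in enumerate(row_profile(mask)):
        if count >= min_pixels and start is None:
            start = y                      # a textline begins
        elif count < min_pixels and start is not None:
            lines.append((start, y))       # background row closes the line
            start = None
    if start is not None:                  # line touches the bottom edge
        lines.append((start, len(mask)))
    return lines


def textline_boxes(mask: Mask) -> List[Tuple[int, int, int, int]]:
    """Return one (x_min, y_min, x_max, y_max) box per textline, max exclusive."""
    boxes = []
    for top, bottom in split_textlines(mask):
        cols = [x for row in mask[top:bottom] for x, v in enumerate(row) if v]
        boxes.append((min(cols), top, max(cols) + 1, bottom))
    return boxes


demo = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
]
print(textline_boxes(demo))
```

Rows with no foreground pixels act as separators, which is why deskewing has to come first: on a skewed region neighbouring lines overlap in the profile and the gaps disappear.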

@@ -105,18 +105,18 @@ Here are the difference in elements detected depending on the `--full-layout`/`-

 ### How to use

-First of all, this model makes use of up to 9 trained models which are responsible for different operations like size detection, column classification, image enhancement, page extraction, main layout detection, full layout detection and textline detection. But this does not mean that all 9 models are always required for every document. Based on the document characteristics and parameters specified, different scenarios can be applied.
+First, this model makes use of up to 9 trained models which are responsible for different operations like size detection, column classification, image enhancement, page extraction, main layout detection, full layout detection and textline detection.That does not mean that all 9 models are always required for every document. Based on the document characteristics and parameters specified, different scenarios can be applied.

 * If none of the parameters is set to `true`, the tool will perform a layout detection of main regions (background, text, images, separators and marginals). An advantage of this tool is that it tries to extract main text regions separately as much as possible.

 * If you set `-ae` (**a**llow image **e**nhancement) parameter to `true`, the tool will first check the ppi (pixel-per-inch) of the image and when it is less than 300, the tool will resize it and only then image enhancement will occur. Image enhancement can also take place without this option, but by setting this option to `true`, the layout xml data (e.g. coordinates) will be based on the resized and enhanced image instead of the original image.

-* For some documents, while the quality is good, their scale is extremly large and the performance of tool decreases. In such cases you can set `-as` (**a**llow **s**caling) to `true`. With this option enabled, the tool will try to rescale the image and only then the layout detection process will begin.
+* For some documents, while the quality is good, their scale is very large, and the performance of tool decreases. In such cases you can set `-as` (**a**llow **s**caling) to `true`. With this option enabled, the tool will try to rescale the image and only then the layout detection process will begin.

 * If you care about drop capitals (initials) and headings, you can set `-fl` (**f**ull **l**ayout) to `true`. With this setting, the tool can currently distinguish 7 document layout classes/elements.

-* In cases where the document includes curved headers or curved lines, rectangular bounding boxes for textlines will not be a great option. In such cases it is strongly recommended to set the flag `-cl` (**c**urved **l**ines) to `true` to find countours of curved lines instead of rectangular boundinx boxes. Be advised that enabling this option increases the processing time of the tool.
+* In cases where the document includes curved headers or curved lines, rectangular bounding boxes for textlines will not be a great option. In such cases it is strongly recommended setting the flag `-cl` (**c**urved **l**ines) to `true` to find contours of curved lines instead of rectangular bounding boxes. Be advised that enabling this option increases the processing time of the tool.

 * To crop and save image regions inside the document, set the parameter `-si` (**s**ave **i**mages) to true and provide a directory path to store the extracted images.

-* This tool is actively being developed. If problems occur or the performance does not meet your expectations, we welcome your feedback via [issues](https://github.com/qurator-spk/eynollah/issues).
+* This tool is actively being developed. If problems occur, or the performance does not meet your expectations, we welcome your feedback via [issues](https://github.com/qurator-spk/eynollah/issues).
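The option list in the "How to use" hunk above amounts to a small decision table, which the following sketch spells out. It is only a reading aid, not code from the repository: the `Options` dataclass, the `describe_run` function and the step strings are hypothetical, and only the flag names (`-ae`, `-as`, `-fl`, `-cl`, `-si`) and the 300 ppi threshold come from the README text. The sketch reads the `-ae` bullet as "resize when below 300 ppi, then enhance", which is one possible interpretation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Options:
    """Hypothetical container mirroring the flags described in the README."""
    allow_enhancement: bool = False          # -ae
    allow_scaling: bool = False              # -as
    full_layout: bool = False                # -fl
    curved_line: bool = False                # -cl
    save_images_dir: Optional[str] = None    # -si plus a target directory


def describe_run(opts: Options, ppi: int) -> List[str]:
    """List the processing steps the README describes for these options."""
    steps = []
    if opts.allow_enhancement:
        if ppi < 300:
            steps.append("resize image (ppi below 300)")
        steps.append("enhance image; output coordinates refer to the enhanced image")
    if opts.allow_scaling:
        steps.append("rescale image before layout detection")
    # Without -fl only the main region types are detected.
    steps.append("full layout detection" if opts.full_layout
                 else "main region layout detection")
    # -cl trades processing time for contours instead of rectangles.
    steps.append("textline contours" if opts.curved_line
                 else "textline bounding boxes")
    if opts.save_images_dir is not None:
        steps.append("save cropped image regions to " + opts.save_images_dir)
    return steps


for step in describe_run(Options(allow_enhancement=True, full_layout=True), ppi=150):
    print(step)
```

With all options left at their defaults the list contains only main region layout detection and rectangular textline boxes, matching the first bullet of the README section.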

@@ -32,7 +32,7 @@ warnings.filterwarnings("ignore")
 from .utils.contour import (
 filter_contours_area_of_image,
 find_contours_mean_y_diff,
-find_new_features_of_contoures,
+find_new_features_of_contours,
 get_text_region_boxes_by_given_contours,
 get_textregion_contours_in_org_image,
 return_contours_of_image,

@@ -47,10 +47,10 @@ from .utils.rotate import (
 rotation_not_90_func_full_layout)
 from .utils.separate_lines import (
 textline_contours_postprocessing,
-seperate_lines_new2,
+separate_lines_new2,
 return_deskew_slop)
 from .utils.drop_capitals import (
-adhere_drop_capital_region_into_cprresponding_textline,
+adhere_drop_capital_region_into_corresponding_textline,
 filter_small_drop_capitals_from_no_patch_layout)
 from .utils.marginals import get_marginals
 from .utils.resize import resize_image

@@ -119,7 +119,7 @@ class Eynollah:
 self.logger = getLogger('eynollah')
 self.dir_models = dir_models

-self.model_dir_of_enhancemnet = dir_models + "/model_enhancement.h5"
+self.model_dir_of_enhancement = dir_models + "/model_enhancement.h5"
 self.model_dir_of_col_classifier = dir_models + "/model_scale_classifier.h5"
 self.model_region_dir_p = dir_models + "/model_main_covid19_lr5-5_scale_1_1_great.h5"
 self.model_region_dir_p2 = dir_models + "/model_main_home_corona3_rot.h5"

@@ -149,7 +149,7 @@ class Eynollah:

 def predict_enhancement(self, img):
 self.logger.debug("enter predict_enhancement")
-model_enhancement, _ = self.start_new_session_and_model(self.model_dir_of_enhancemnet)
+model_enhancement, _ = self.start_new_session_and_model(self.model_dir_of_enhancement)

 img_height_model = model_enhancement.layers[len(model_enhancement.layers) - 1].output_shape[1]
 img_width_model = model_enhancement.layers[len(model_enhancement.layers) - 1].output_shape[2]

@@ -816,7 +816,7 @@ class Eynollah:
 all_box_coord_per_process = []
 index_by_text_region_contours = []

-textline_cnt_seperated = np.zeros(textline_mask_tot_ea.shape)
+textline_cnt_separated = np.zeros(textline_mask_tot_ea.shape)

 for mv in range(len(boxes_text)):


@@ -833,8 +833,8 @@ class Eynollah:
 slope_for_all = [slope_deskew][0]
 else:
 try:
-textline_con, hierachy = return_contours_of_image(img_int_p)
-textline_con_fil = filter_contours_area_of_image(img_int_p, textline_con, hierachy, max_area=1, min_area=0.0008)
+textline_con, hierarchy = return_contours_of_image(img_int_p)
+textline_con_fil = filter_contours_area_of_image(img_int_p, textline_con, hierarchy, max_area=1, min_area=0.0008)
 y_diff_mean = find_contours_mean_y_diff(textline_con_fil)
 sigma_des = max(1, int(y_diff_mean * (4.0 / 40.0)))


@@ -868,19 +868,19 @@ class Eynollah:
 textline_biggest_region = mask_biggest * textline_mask_tot_ea

 # print(slope_for_all,'slope_for_all')
-textline_rotated_seperated = seperate_lines_new2(textline_biggest_region[y : y + h, x : x + w], 0, num_col, slope_for_all, plotter=self.plotter)
+textline_rotated_separated = separate_lines_new2(textline_biggest_region[y : y + h, x : x + w], 0, num_col, slope_for_all, plotter=self.plotter)

 # new line added
-##print(np.shape(textline_rotated_seperated),np.shape(mask_biggest))
-textline_rotated_seperated[mask_region_in_patch_region[:, :] != 1] = 0
+##print(np.shape(textline_rotated_separated),np.shape(mask_biggest))
+textline_rotated_separated[mask_region_in_patch_region[:, :] != 1] = 0
 # till here

-textline_cnt_seperated[y : y + h, x : x + w] = textline_rotated_seperated
-textline_region_in_image[y : y + h, x : x + w] = textline_rotated_seperated
+textline_cnt_separated[y : y + h, x : x + w] = textline_rotated_separated
+textline_region_in_image[y : y + h, x : x + w] = textline_rotated_separated

 # plt.imshow(textline_region_in_image)
 # plt.show()
-# plt.imshow(textline_cnt_seperated)
+# plt.imshow(textline_cnt_separated)
 # plt.show()

 pixel_img = 1

@@ -944,8 +944,8 @@ class Eynollah:
 bounding_box_of_textregion_per_each_subprocess.append(boxes_text[mv])
 else:
 try:
-textline_con, hierachy = return_contours_of_image(img_int_p)
-textline_con_fil = filter_contours_area_of_image(img_int_p, textline_con, hierachy, max_area=1, min_area=0.00008)
+textline_con, hierarchy = return_contours_of_image(img_int_p)
+textline_con_fil = filter_contours_area_of_image(img_int_p, textline_con, hierarchy, max_area=1, min_area=0.00008)
 y_diff_mean = find_contours_mean_y_diff(textline_con_fil)
 sigma_des = int(y_diff_mean * (4.0 / 40.0))
 if sigma_des < 1:

@@ -1018,8 +1018,8 @@ class Eynollah:
 crop_img = crop_img[:, :, 0]
 crop_img = cv2.erode(crop_img, KERNEL, iterations=2)
 try:
-textline_con, hierachy = return_contours_of_image(crop_img)
-textline_con_fil = filter_contours_area_of_image(crop_img, textline_con, hierachy, max_area=1, min_area=0.0008)
+textline_con, hierarchy = return_contours_of_image(crop_img)
+textline_con_fil = filter_contours_area_of_image(crop_img, textline_con, hierarchy, max_area=1, min_area=0.0008)
 y_diff_mean = find_contours_mean_y_diff(textline_con_fil)
 sigma_des = max(1, int(y_diff_mean * (4.0 / 40.0)))
 crop_img[crop_img > 0] = 1
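The three hunks above repeat the same pattern: contours are filtered with `min_area`/`max_area` values such as `0.0008` and `1`, and a smoothing width `sigma_des` is derived from the mean vertical distance between textlines. The body of `filter_contours_area_of_image` is not part of this diff, so the sketch below only assumes that the two bounds are fractions of the image area, in line with the README's "minimum area ... in relation to the overall image dimensions". The names `polygon_area`, `filter_by_relative_area` and `smoothing_sigma` are hypothetical, and contours are plain lists of `(x, y)` points rather than OpenCV arrays.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def polygon_area(points: Sequence[Point]) -> float:
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]   # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def filter_by_relative_area(contours: List[Sequence[Point]],
                            image_shape: Tuple[int, int],
                            min_area: float = 0.0008,
                            max_area: float = 1.0) -> List[Sequence[Point]]:
    """Keep contours whose area is between min_area and max_area of the image area.

    Assumption: both bounds are fractions of height * width.
    """
    height, width = image_shape
    image_area = float(height * width)
    return [c for c in contours
            if min_area <= polygon_area(c) / image_area <= max_area]


def smoothing_sigma(y_diff_mean: float) -> int:
    """Same arithmetic as the sigma_des lines in the diff, clamped to at least 1."""
    return max(1, int(y_diff_mean * (4.0 / 40.0)))


big = [(0, 0), (10, 0), (10, 10), (0, 10)]    # 100 px^2 -> 1 % of a 100x100 image
tiny = [(0, 0), (2, 0), (2, 2), (0, 2)]       # 4 px^2   -> 0.04 %
kept = filter_by_relative_area([big, tiny], (100, 100))
print(len(kept), smoothing_sigma(45), smoothing_sigma(5))
```

The `max(1, ...)` form and the `int(...)` followed by `if sigma_des < 1:` form shown in the hunks express the same lower bound of 1.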

@@ -1124,8 +1124,8 @@ class Eynollah:

 def do_order_of_regions_full_layout(self, contours_only_text_parent, contours_only_text_parent_h, boxes, textline_mask_tot):
 self.logger.debug("enter do_order_of_regions_full_layout")
-cx_text_only, cy_text_only, x_min_text_only, _, _, _, y_cor_x_min_main = find_new_features_of_contoures(contours_only_text_parent)
-cx_text_only_h, cy_text_only_h, x_min_text_only_h, _, _, _, y_cor_x_min_main_h = find_new_features_of_contoures(contours_only_text_parent_h)
+cx_text_only, cy_text_only, x_min_text_only, _, _, _, y_cor_x_min_main = find_new_features_of_contours(contours_only_text_parent)
+cx_text_only_h, cy_text_only_h, x_min_text_only_h, _, _, _, y_cor_x_min_main_h = find_new_features_of_contours(contours_only_text_parent_h)

 try:
 arg_text_con = []

@@ -1274,7 +1274,7 @@ class Eynollah:

 def do_order_of_regions_no_full_layout(self, contours_only_text_parent, contours_only_text_parent_h, boxes, textline_mask_tot):
 self.logger.debug("enter do_order_of_regions_no_full_layout")
-cx_text_only, cy_text_only, x_min_text_only, _, _, _, y_cor_x_min_main = find_new_features_of_contoures(contours_only_text_parent)
+cx_text_only, cy_text_only, x_min_text_only, _, _, _, y_cor_x_min_main = find_new_features_of_contours(contours_only_text_parent)

 try:
 arg_text_con = []

@@ -1461,9 +1461,9 @@ class Eynollah:

 if num_col_classifier in (1, 2):
 try:
-regions_without_seperators = (text_regions_p[:, :] == 1) * 1
-regions_without_seperators = regions_without_seperators.astype(np.uint8)
-text_regions_p = get_marginals(rotate_image(regions_without_seperators, slope_deskew), text_regions_p, num_col_classifier, slope_deskew, kernel=KERNEL)
+regions_without_separators = (text_regions_p[:, :] == 1) * 1
+regions_without_separators = regions_without_separators.astype(np.uint8)
+text_regions_p = get_marginals(rotate_image(regions_without_separators, slope_deskew), text_regions_p, num_col_classifier, slope_deskew, kernel=KERNEL)
 except Exception as e:
 self.logger.error("exception %s", e)


@@ -1478,36 +1478,36 @@ class Eynollah:
 _, textline_mask_tot_d, text_regions_p_1_n = rotation_not_90_func(image_page, textline_mask_tot, text_regions_p, slope_deskew)
 text_regions_p_1_n = resize_image(text_regions_p_1_n, text_regions_p.shape[0], text_regions_p.shape[1])
 textline_mask_tot_d = resize_image(textline_mask_tot_d, text_regions_p.shape[0], text_regions_p.shape[1])
-regions_without_seperators_d = (text_regions_p_1_n[:, :] == 1) * 1
-regions_without_seperators = (text_regions_p[:, :] == 1) * 1 # ( (text_regions_p[:,:]==1) | (text_regions_p[:,:]==2) )*1 #self.return_regions_without_seperators_new(text_regions_p[:,:,0],img_only_regions)
+regions_without_separators_d = (text_regions_p_1_n[:, :] == 1) * 1
+regions_without_separators = (text_regions_p[:, :] == 1) * 1 # ( (text_regions_p[:,:]==1) | (text_regions_p[:,:]==2) )*1 #self.return_regions_without_separators_new(text_regions_p[:,:,0],img_only_regions)
 if np.abs(slope_deskew) < SLOPE_THRESHOLD:
 text_regions_p_1_n = None
 textline_mask_tot_d = None
-regions_without_seperators_d = None
+regions_without_separators_d = None
 pixel_lines = 3
 if np.abs(slope_deskew) < SLOPE_THRESHOLD:
-_, _, matrix_of_lines_ch, spliter_y_new, _ = find_number_of_columns_in_document(np.repeat(text_regions_p[:, :, np.newaxis], 3, axis=2), num_col_classifier, pixel_lines)
+_, _, matrix_of_lines_ch, splitter_y_new, _ = find_number_of_columns_in_document(np.repeat(text_regions_p[:, :, np.newaxis], 3, axis=2), num_col_classifier, pixel_lines)

 if np.abs(slope_deskew) >= SLOPE_THRESHOLD:
-_, _, matrix_of_lines_ch_d, spliter_y_new_d, _ = find_number_of_columns_in_document(np.repeat(text_regions_p_1_n[:, :, np.newaxis], 3, axis=2), num_col_classifier, pixel_lines)
+_, _, matrix_of_lines_ch_d, splitter_y_new_d, _ = find_number_of_columns_in_document(np.repeat(text_regions_p_1_n[:, :, np.newaxis], 3, axis=2), num_col_classifier, pixel_lines)
 K.clear_session()

 self.logger.info("num_col_classifier: %s", num_col_classifier)

 if num_col_classifier >= 3:
 if np.abs(slope_deskew) < SLOPE_THRESHOLD:
-regions_without_seperators = regions_without_seperators.astype(np.uint8)
-regions_without_seperators = cv2.erode(regions_without_seperators[:, :], KERNEL, iterations=6)
+regions_without_separators = regions_without_separators.astype(np.uint8)
+regions_without_separators = cv2.erode(regions_without_separators[:, :], KERNEL, iterations=6)
 else:
-regions_without_seperators_d = regions_without_seperators_d.astype(np.uint8)
-regions_without_seperators_d = cv2.erode(regions_without_seperators_d[:, :], KERNEL, iterations=6)
+regions_without_separators_d = regions_without_separators_d.astype(np.uint8)
+regions_without_separators_d = cv2.erode(regions_without_separators_d[:, :], KERNEL, iterations=6)
 t1 = time.time()
 if np.abs(slope_deskew) < SLOPE_THRESHOLD:
-boxes = return_boxes_of_images_by_order_of_reading_new(spliter_y_new, regions_without_seperators, matrix_of_lines_ch, num_col_classifier)
+boxes = return_boxes_of_images_by_order_of_reading_new(splitter_y_new, regions_without_separators, matrix_of_lines_ch, num_col_classifier)
 boxes_d = None
 self.logger.debug("len(boxes): %s", len(boxes))
 else:
-boxes_d = return_boxes_of_images_by_order_of_reading_new(spliter_y_new_d, regions_without_seperators_d, matrix_of_lines_ch_d, num_col_classifier)
+boxes_d = return_boxes_of_images_by_order_of_reading_new(splitter_y_new_d, regions_without_separators_d, matrix_of_lines_ch_d, num_col_classifier)
 boxes = None
 self.logger.debug("len(boxes): %s", len(boxes_d))


@@ -1519,7 +1519,7 @@ class Eynollah:
 # plt.show()
 K.clear_session()
 self.logger.debug('exit run_boxes_no_full_layout')
-return polygons_of_images, img_revised_tab, text_regions_p_1_n, textline_mask_tot_d, regions_without_seperators_d, boxes, boxes_d
+return polygons_of_images, img_revised_tab, text_regions_p_1_n, textline_mask_tot_d, regions_without_separators_d, boxes, boxes_d

 def run_boxes_full_layout(self, image_page, textline_mask_tot, text_regions_p, slope_deskew, num_col_classifier, img_only_regions):
 self.logger.debug('enter run_boxes_full_layout')

@@ -1570,19 +1570,19 @@ class Eynollah:
 text_regions_p_1_n = resize_image(text_regions_p_1_n, text_regions_p.shape[0], text_regions_p.shape[1])
 textline_mask_tot_d = resize_image(textline_mask_tot_d, text_regions_p.shape[0], text_regions_p.shape[1])
 regions_fully_n = resize_image(regions_fully_n, text_regions_p.shape[0], text_regions_p.shape[1])
-regions_without_seperators_d = (text_regions_p_1_n[:, :] == 1) * 1
+regions_without_separators_d = (text_regions_p_1_n[:, :] == 1) * 1
 else:
 text_regions_p_1_n = None
 textline_mask_tot_d = None
-regions_without_seperators_d = None
+regions_without_separators_d = None

-regions_without_seperators = (text_regions_p[:, :] == 1) * 1 # ( (text_regions_p[:,:]==1) | (text_regions_p[:,:]==2) )*1 #self.return_regions_without_seperators_new(text_regions_p[:,:,0],img_only_regions)
+regions_without_separators = (text_regions_p[:, :] == 1) * 1 # ( (text_regions_p[:,:]==1) | (text_regions_p[:,:]==2) )*1 #self.return_regions_without_separators_new(text_regions_p[:,:,0],img_only_regions)

 K.clear_session()
 img_revised_tab = np.copy(text_regions_p[:, :])
 polygons_of_images = return_contours_of_interested_region(img_revised_tab, 5)
 self.logger.debug('exit run_boxes_full_layout')
-return polygons_of_images, img_revised_tab, text_regions_p_1_n, textline_mask_tot_d, regions_without_seperators_d, regions_fully, regions_without_seperators
+return polygons_of_images, img_revised_tab, text_regions_p_1_n, textline_mask_tot_d, regions_without_separators_d, regions_fully, regions_without_separators

 def run(self):
 """

@@ -1627,14 +1627,14 @@ class Eynollah:
 t1 = time.time()

 if not self.full_layout:
-polygons_of_images, img_revised_tab, text_regions_p_1_n, textline_mask_tot_d, regions_without_seperators_d, boxes, boxes_d = self.run_boxes_no_full_layout(image_page, textline_mask_tot, text_regions_p, slope_deskew, num_col_classifier)
+polygons_of_images, img_revised_tab, text_regions_p_1_n, textline_mask_tot_d, regions_without_separators_d, boxes, boxes_d = self.run_boxes_no_full_layout(image_page, textline_mask_tot, text_regions_p, slope_deskew, num_col_classifier)

 pixel_img = 4
 min_area_mar = 0.00001
 polygons_of_marginals = return_contours_of_interested_region(text_regions_p, pixel_img, min_area_mar)

 if self.full_layout:
-polygons_of_images, img_revised_tab, text_regions_p_1_n, textline_mask_tot_d, regions_without_seperators_d, regions_fully, regions_without_seperators = self.run_boxes_full_layout(image_page, textline_mask_tot, text_regions_p, slope_deskew, num_col_classifier, img_only_regions)
+polygons_of_images, img_revised_tab, text_regions_p_1_n, textline_mask_tot_d, regions_without_separators_d, regions_fully, regions_without_separators = self.run_boxes_full_layout(image_page, textline_mask_tot, text_regions_p, slope_deskew, num_col_classifier, img_only_regions)

 text_only = ((img_revised_tab[:, :] == 1)) * 1
 if np.abs(slope_deskew) >= SLOPE_THRESHOLD:

@@ -1655,8 +1655,8 @@ class Eynollah:
 contours_only_text_parent = list(np.array(contours_only_text_parent)[index_con_parents])
 areas_cnt_text_parent = list(np.array(areas_cnt_text_parent)[index_con_parents])

-cx_bigest_big, cy_biggest_big, _, _, _, _, _ = find_new_features_of_contoures([contours_biggest])
-cx_bigest, cy_biggest, _, _, _, _, _ = find_new_features_of_contoures(contours_only_text_parent)
+cx_bigest_big, cy_biggest_big, _, _, _, _, _ = find_new_features_of_contours([contours_biggest])
+cx_bigest, cy_biggest, _, _, _, _, _ = find_new_features_of_contours(contours_only_text_parent)

 contours_only_text_d, hir_on_text_d = return_contours_of_image(text_only_d)
 contours_only_text_parent_d = return_parent_contours(contours_only_text_d, hir_on_text_d)

@@ -1669,8 +1669,8 @@ class Eynollah:
 contours_only_text_parent_d=list(np.array(contours_only_text_parent_d)[index_con_parents_d] )
 areas_cnt_text_d=list(np.array(areas_cnt_text_d)[index_con_parents_d] )

-cx_bigest_d_big, cy_biggest_d_big, _, _, _, _, _ = find_new_features_of_contoures([contours_biggest_d])
-cx_bigest_d, cy_biggest_d, _, _, _, _, _ = find_new_features_of_contoures(contours_only_text_parent_d)
+cx_bigest_d_big, cy_biggest_d_big, _, _, _, _, _ = find_new_features_of_contours([contours_biggest_d])
+cx_bigest_d, cy_biggest_d, _, _, _, _, _ = find_new_features_of_contours(contours_only_text_parent_d)
 try:
 cx_bigest_d_last5 = cx_bigest_d[-5:]
 cy_biggest_d_last5 = cy_biggest_d[-5:]

@@ -1715,8 +1715,8 @@ class Eynollah:
 contours_only_text_parent = list(np.array(contours_only_text_parent)[index_con_parents])
 areas_cnt_text_parent = list(np.array(areas_cnt_text_parent)[index_con_parents])

-cx_bigest_big, cy_biggest_big, _, _, _, _, _ = find_new_features_of_contoures([contours_biggest])
-cx_bigest, cy_biggest, _, _, _, _, _ = find_new_features_of_contoures(contours_only_text_parent)
+cx_bigest_big, cy_biggest_big, _, _, _, _, _ = find_new_features_of_contours([contours_biggest])
+cx_bigest, cy_biggest, _, _, _, _, _ = find_new_features_of_contours(contours_only_text_parent)
 self.logger.debug('areas_cnt_text_parent %s', areas_cnt_text_parent)
 # self.logger.debug('areas_cnt_text_parent_d %s', areas_cnt_text_parent_d)
 # self.logger.debug('len(contours_only_text_parent) %s', len(contours_only_text_parent_d))

@@ -1753,46 +1753,46 @@ class Eynollah:
 polygons_of_tabels = []
 pixel_img = 4
 polygons_of_drop_capitals = return_contours_of_interested_region_by_min_size(text_regions_p, pixel_img)
-all_found_texline_polygons = adhere_drop_capital_region_into_cprresponding_textline(text_regions_p, polygons_of_drop_capitals, contours_only_text_parent, contours_only_text_parent_h, all_box_coord, all_box_coord_h, all_found_texline_polygons, all_found_texline_polygons_h, kernel=KERNEL, curved_line=self.curved_line)
+all_found_texline_polygons = adhere_drop_capital_region_into_corresponding_textline(text_regions_p, polygons_of_drop_capitals, contours_only_text_parent, contours_only_text_parent_h, all_box_coord, all_box_coord_h, all_found_texline_polygons, all_found_texline_polygons_h, kernel=KERNEL, curved_line=self.curved_line)

 # print(len(contours_only_text_parent_h),len(contours_only_text_parent_h_d_ordered),'contours_only_text_parent_h')
 pixel_lines = 6

 if not self.headers_off:
 if np.abs(slope_deskew) < SLOPE_THRESHOLD:
-num_col, _, matrix_of_lines_ch, spliter_y_new, _ = find_number_of_columns_in_document(np.repeat(text_regions_p[:, :, np.newaxis], 3, axis=2), num_col_classifier, pixel_lines, contours_only_text_parent_h)
+num_col, _, matrix_of_lines_ch, splitter_y_new, _ = find_number_of_columns_in_document(np.repeat(text_regions_p[:, :, np.newaxis], 3, axis=2), num_col_classifier, pixel_lines, contours_only_text_parent_h)
 else:
-_, _, matrix_of_lines_ch_d, spliter_y_new_d, _ = find_number_of_columns_in_document(np.repeat(text_regions_p_1_n[:, :, np.newaxis], 3, axis=2), num_col_classifier, pixel_lines, contours_only_text_parent_h_d_ordered)
+_, _, matrix_of_lines_ch_d, splitter_y_new_d, _ = find_number_of_columns_in_document(np.repeat(text_regions_p_1_n[:, :, np.newaxis], 3, axis=2), num_col_classifier, pixel_lines, contours_only_text_parent_h_d_ordered)
 elif self.headers_off:
 if np.abs(slope_deskew) < SLOPE_THRESHOLD:
-num_col, _, matrix_of_lines_ch, spliter_y_new, _ = find_number_of_columns_in_document(np.repeat(text_regions_p[:, :, np.newaxis], 3, axis=2), num_col_classifier, pixel_lines)
+num_col, _, matrix_of_lines_ch, splitter_y_new, _ = find_number_of_columns_in_document(np.repeat(text_regions_p[:, :, np.newaxis], 3, axis=2), num_col_classifier, pixel_lines)
 else:
-_, _, matrix_of_lines_ch_d, spliter_y_new_d, _ = find_number_of_columns_in_document(np.repeat(text_regions_p_1_n[:, :, np.newaxis], 3, axis=2), num_col_classifier, pixel_lines)
+_, _, matrix_of_lines_ch_d, splitter_y_new_d, _ = find_number_of_columns_in_document(np.repeat(text_regions_p_1_n[:, :, np.newaxis], 3, axis=2), num_col_classifier, pixel_lines)

 # print(peaks_neg_fin,peaks_neg_fin_d,'num_col2')
-# print(spliter_y_new,spliter_y_new_d,'num_col_classifier')
+# print(splitter_y_new,splitter_y_new_d,'num_col_classifier')
 # print(matrix_of_lines_ch.shape,matrix_of_lines_ch_d.shape,'matrix_of_lines_ch')

 if num_col_classifier >= 3:
 if np.abs(slope_deskew) < SLOPE_THRESHOLD:
-regions_without_seperators = regions_without_seperators.astype(np.uint8)
-regions_without_seperators = cv2.erode(regions_without_seperators[:, :], KERNEL, iterations=6)
-random_pixels_for_image = np.random.randn(regions_without_seperators.shape[0], regions_without_seperators.shape[1])
+regions_without_separators = regions_without_separators.astype(np.uint8)
+regions_without_separators = cv2.erode(regions_without_separators[:, :], KERNEL, iterations=6)
+random_pixels_for_image = np.random.randn(regions_without_separators.shape[0], regions_without_separators.shape[1])
 random_pixels_for_image[random_pixels_for_image < -0.5] = 0
 random_pixels_for_image[random_pixels_for_image != 0] = 1
-regions_without_seperators[(random_pixels_for_image[:, :] == 1) & (text_regions_p[:, :] == 5)] = 1

|

||||

regions_without_separators[(random_pixels_for_image[:, :] == 1) & (text_regions_p[:, :] == 5)] = 1

|

||||

else:

|

||||

regions_without_seperators_d = regions_without_seperators_d.astype(np.uint8)

|

||||

regions_without_seperators_d = cv2.erode(regions_without_seperators_d[:, :], KERNEL, iterations=6)

|

||||

random_pixels_for_image = np.random.randn(regions_without_seperators_d.shape[0], regions_without_seperators_d.shape[1])

|

||||

regions_without_separators_d = regions_without_separators_d.astype(np.uint8)

|

||||

regions_without_separators_d = cv2.erode(regions_without_separators_d[:, :], KERNEL, iterations=6)

|

||||

random_pixels_for_image = np.random.randn(regions_without_separators_d.shape[0], regions_without_separators_d.shape[1])

|

||||

random_pixels_for_image[random_pixels_for_image < -0.5] = 0

|

||||

random_pixels_for_image[random_pixels_for_image != 0] = 1

|

||||

regions_without_seperators_d[(random_pixels_for_image[:, :] == 1) & (text_regions_p_1_n[:, :] == 5)] = 1

|

||||

regions_without_separators_d[(random_pixels_for_image[:, :] == 1) & (text_regions_p_1_n[:, :] == 5)] = 1

|

||||

|

||||

if np.abs(slope_deskew) < SLOPE_THRESHOLD:

|

||||

boxes = return_boxes_of_images_by_order_of_reading_new(spliter_y_new, regions_without_seperators, matrix_of_lines_ch, num_col_classifier)

|

||||

boxes = return_boxes_of_images_by_order_of_reading_new(splitter_y_new, regions_without_separators, matrix_of_lines_ch, num_col_classifier)

|

||||

else:

|

||||

boxes_d = return_boxes_of_images_by_order_of_reading_new(spliter_y_new_d, regions_without_seperators_d, matrix_of_lines_ch_d, num_col_classifier)

|

||||

boxes_d = return_boxes_of_images_by_order_of_reading_new(splitter_y_new_d, regions_without_separators_d, matrix_of_lines_ch_d, num_col_classifier)

|

||||

|

||||

if self.plotter:

|

||||

self.plotter.write_images_into_directory(polygons_of_images, image_page)
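The `num_col_classifier >= 3` branch in the hunk above thresholds standard-normal noise at -0.5 and uses the result to switch on a random subset of pixels whose label equals 5. A minimal sketch of that masking step follows, assuming NumPy only; the helper name `sprinkle` and its parameters are hypothetical and not part of eynollah. Because P(X >= -0.5) is about 0.69 for a standard normal, roughly 69% of the eligible pixels end up set to 1.

```python
import numpy as np

def sprinkle(regions, labels, label=5, threshold=-0.5, rng=None):
    """Set a random subset of pixels with the given label to 1 (hypothetical helper)."""
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.standard_normal(regions.shape)
    # Same effect as zeroing noise below the threshold and setting the rest to 1.
    keep = noise >= threshold
    out = regions.copy()
    out[keep & (labels == label)] = 1
    return out

# Toy input: an empty mask and a label map that is entirely class 5.
regions = np.zeros((200, 200), dtype=np.uint8)
labels = np.full((200, 200), 5)
result = sprinkle(regions, labels, rng=np.random.default_rng(0))
fraction_on = result.mean()  # close to 0.69 for a large enough image
```

Pixels with any other label are left as they were, which matches the `& (text_regions_p[:, :] == 5)` condition in the diff.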

|

||||

|

|

|

|||

|

|

@ -6,7 +6,7 @@ import cv2

|

|||

from scipy.ndimage import gaussian_filter1d

|

||||

|

||||

from .utils import crop_image_inside_box

|

||||

from .utils.rotate import rotyate_image_different

|

||||

from .utils.rotate import rotate_image_different

|

||||

from .utils.resize import resize_image

|

||||

|

||||

class EynollahPlotter():

|

||||

|

|

@ -121,7 +121,7 @@ class EynollahPlotter():

|

|||

if self.dir_of_all is not None:

|

||||

cv2.imwrite(os.path.join(self.dir_of_all, self.image_filename_stem + "_org.png"), self.image_org)

|

||||

if self.dir_of_deskewed is not None:

|

||||

img_rotated = rotyate_image_different(self.image_org, slope_deskew)

|

||||

img_rotated = rotate_image_different(self.image_org, slope_deskew)

|

||||

cv2.imwrite(os.path.join(self.dir_of_deskewed, self.image_filename_stem + "_deskewed.png"), img_rotated)

|

||||

|

||||

def save_page_image(self, image_page):

|

||||

|

|

@ -153,10 +153,10 @@ class EynollahPlotter():

|

|||

plt.legend(loc='best')

|

||||

plt.savefig(os.path.join(self.dir_of_all, self.image_filename_stem+'_rotation_angle.png'))

|

||||

|

||||

def write_images_into_directory(self, img_contoures, image_page):

|

||||

def write_images_into_directory(self, img_contours, image_page):

|

||||

if self.dir_of_cropped_images is not None:

|

||||

index = 0

|

||||

for cont_ind in img_contoures:

|

||||

for cont_ind in img_contours:

|

||||

x, y, w, h = cv2.boundingRect(cont_ind)

|

||||

box = [x, y, w, h]

|

||||

croped_page, page_coord = crop_image_inside_box(box, image_page)

|

||||

|

|

|

|||

|

|

@ -10,7 +10,7 @@ from scipy.ndimage import gaussian_filter1d

|

|||

|

||||

from .is_nan import isNaN

|

||||

from .contour import (contours_in_same_horizon,

|

||||

find_new_features_of_contoures,

|

||||

find_new_features_of_contours,

|

||||

return_contours_of_image,

|

||||

return_parent_contours)

|

||||

|

||||

|

|

@ -348,27 +348,28 @@ def boosting_headers_by_longshot_region_segmentation(textregion_pre_p, textregio

|

|||

# headers_in_longshot= ( (textregion_pre_np[:,:,0]==2) | (textregion_pre_np[:,:,0]==1) )*1

|

||||

textregion_pre_p[:, :, 0][(headers_in_longshot[:, :] == 1) & (textregion_pre_p[:, :, 0] != 4)] = 2

|

||||

textregion_pre_p[:, :, 0][textregion_pre_p[:, :, 0] == 1] = 0

|

||||

# textregion_pre_p[:,:,0][( img_only_text[:,:]==1) & (textregion_pre_p[:,:,0]!=7) & (textregion_pre_p[:,:,0]!=2)]=1 # eralier it was so, but by this manner the drop capitals are alse deleted

|

||||

# earlier it was so, but by this manner the drop capitals are also deleted

|

||||

# textregion_pre_p[:,:,0][( img_only_text[:,:]==1) & (textregion_pre_p[:,:,0]!=7) & (textregion_pre_p[:,:,0]!=2)]=1

|

||||

textregion_pre_p[:, :, 0][(img_only_text[:, :] == 1) & (textregion_pre_p[:, :, 0] != 7) & (textregion_pre_p[:, :, 0] != 4) & (textregion_pre_p[:, :, 0] != 2)] = 1

|

||||

return textregion_pre_p

|

||||

|

||||

|

||||

def find_num_col_deskew(regions_without_seperators, sigma_, multiplier=3.8):

|

||||

regions_without_seperators_0 = regions_without_seperators[:,:].sum(axis=1)

|

||||

z = gaussian_filter1d(regions_without_seperators_0, sigma_)

|

||||

def find_num_col_deskew(regions_without_separators, sigma_, multiplier=3.8):

|

||||

regions_without_separators_0 = regions_without_separators[:,:].sum(axis=1)

|

||||

z = gaussian_filter1d(regions_without_separators_0, sigma_)

|

||||

return np.std(z)

|

||||

|

||||

|

||||

def find_num_col(regions_without_seperators, multiplier=3.8):

|

||||

regions_without_seperators_0 = regions_without_seperators[:, :].sum(axis=0)

|

||||

##plt.plot(regions_without_seperators_0)

|

||||

def find_num_col(regions_without_separators, multiplier=3.8):

|

||||

regions_without_separators_0 = regions_without_separators[:, :].sum(axis=0)

|

||||

##plt.plot(regions_without_separators_0)

|

||||

##plt.show()

|

||||

sigma_ = 35 # 70#35

|

||||

meda_n_updown = regions_without_seperators_0[len(regions_without_seperators_0) :: -1]

|

||||

first_nonzero = next((i for i, x in enumerate(regions_without_seperators_0) if x), 0)

|

||||

meda_n_updown = regions_without_separators_0[len(regions_without_separators_0) :: -1]

|

||||

first_nonzero = next((i for i, x in enumerate(regions_without_separators_0) if x), 0)

|

||||

last_nonzero = next((i for i, x in enumerate(meda_n_updown) if x), 0)

|

||||

last_nonzero = len(regions_without_seperators_0) - last_nonzero

|

||||

y = regions_without_seperators_0 # [first_nonzero:last_nonzero]

|

||||

last_nonzero = len(regions_without_separators_0) - last_nonzero

|

||||

y = regions_without_separators_0 # [first_nonzero:last_nonzero]

|

||||

y_help = np.zeros(len(y) + 20)

|

||||

y_help[10 : len(y) + 10] = y

|

||||

x = np.array(range(len(y)))

|

||||

|

|

@ -386,8 +387,8 @@ def find_num_col(regions_without_seperators, multiplier=3.8):

|

|||

first_nonzero = first_nonzero + 200

|

||||

|

||||

peaks_neg = peaks_neg[(peaks_neg > first_nonzero) & (peaks_neg < last_nonzero)]

|

||||

peaks = peaks[(peaks > 0.06 * regions_without_seperators.shape[1]) & (peaks < 0.94 * regions_without_seperators.shape[1])]

|

||||

peaks_neg = peaks_neg[(peaks_neg > 370) & (peaks_neg < (regions_without_seperators.shape[1] - 370))]

|

||||

peaks = peaks[(peaks > 0.06 * regions_without_separators.shape[1]) & (peaks < 0.94 * regions_without_separators.shape[1])]

|

||||

peaks_neg = peaks_neg[(peaks_neg > 370) & (peaks_neg < (regions_without_separators.shape[1] - 370))]

|

||||

interest_pos = z[peaks]

|

||||

interest_pos = interest_pos[interest_pos > 10]

|

||||

# plt.plot(z)
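The `find_num_col` hunks sum the mask along `axis=0`, smooth the profile, and keep only extrema away from the page margins. The sketch below illustrates that idea under stated assumptions: it swaps in a hand-rolled Gaussian convolution and a plain local-minimum test in place of `gaussian_filter1d` and `find_peaks`, and the function name `column_gaps`, the `depth` filter, and the default values are hypothetical rather than taken from eynollah.

```python
import numpy as np

def column_gaps(mask, sigma=3.0, margin=0.06, depth=0.5):
    """Return x positions of valleys in the vertical projection of a binary mask."""
    profile = mask.sum(axis=0).astype(float)  # one value per image column
    # Hand-rolled Gaussian smoothing (stand-in for scipy's gaussian_filter1d).
    radius = int(3 * sigma)
    xs = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (xs / sigma) ** 2)
    kernel /= kernel.sum()
    padded = np.pad(profile, radius, mode="edge")
    z = np.convolve(padded, kernel, mode="valid")
    # Local minima of the smoothed profile (stand-in for find_peaks on -z).
    inner = np.arange(1, len(z) - 1)
    valleys = inner[(z[inner] < z[inner - 1]) & (z[inner] <= z[inner + 1])]
    # Assumed extra filter: keep only valleys well below the tallest peak.
    valleys = valleys[z[valleys] < depth * z.max()]
    # Discard valleys too close to the left or right page edge.
    width = mask.shape[1]
    return valleys[(valleys > margin * width) & (valleys < (1 - margin) * width)]

# Synthetic page: two text columns separated by an empty gutter.
page = np.zeros((50, 100), dtype=np.uint8)
page[:, 10:45] = 1
page[:, 55:90] = 1
gaps = column_gaps(page)
num_col = len(gaps) + 1  # one gutter implies two columns
```

The margin filter plays the role of the `0.06 * shape[1]` and `0.94 * shape[1]` bounds in the diff; the fixed pixel offsets (370, 500) used for `peaks_neg` are not reproduced here.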

|

||||

|

|

@ -517,22 +518,22 @@ def find_num_col(regions_without_seperators, multiplier=3.8):

|

|||

##print(len(peaks_neg_true))

|

||||

return len(peaks_neg_true), peaks_neg_true

|

||||

|

||||

def find_num_col_only_image(regions_without_seperators, multiplier=3.8):

|

||||

regions_without_seperators_0 = regions_without_seperators[:, :].sum(axis=0)

|

||||

def find_num_col_only_image(regions_without_separators, multiplier=3.8):

|

||||

regions_without_separators_0 = regions_without_separators[:, :].sum(axis=0)

|

||||

|

||||

##plt.plot(regions_without_seperators_0)

|

||||

##plt.plot(regions_without_separators_0)

|

||||

##plt.show()

|

||||

|

||||

sigma_ = 15

|

||||

|

||||

meda_n_updown = regions_without_seperators_0[len(regions_without_seperators_0) :: -1]

|

||||

meda_n_updown = regions_without_separators_0[len(regions_without_separators_0) :: -1]

|

||||

|

||||

first_nonzero = next((i for i, x in enumerate(regions_without_seperators_0) if x), 0)

|

||||

first_nonzero = next((i for i, x in enumerate(regions_without_separators_0) if x), 0)

|

||||

last_nonzero = next((i for i, x in enumerate(meda_n_updown) if x), 0)

|

||||

|

||||

last_nonzero = len(regions_without_seperators_0) - last_nonzero

|

||||

last_nonzero = len(regions_without_separators_0) - last_nonzero

|

||||

|

||||

y = regions_without_seperators_0 # [first_nonzero:last_nonzero]

|

||||

y = regions_without_separators_0 # [first_nonzero:last_nonzero]

|

||||

|

||||

y_help = np.zeros(len(y) + 20)

|

||||

|

||||

|

|

@ -558,9 +559,9 @@ def find_num_col_only_image(regions_without_seperators, multiplier=3.8):

|

|||

|

||||

peaks_neg = peaks_neg[(peaks_neg > first_nonzero) & (peaks_neg < last_nonzero)]

|

||||

|

||||

peaks = peaks[(peaks > 0.09 * regions_without_seperators.shape[1]) & (peaks < 0.91 * regions_without_seperators.shape[1])]

|

||||

peaks = peaks[(peaks > 0.09 * regions_without_separators.shape[1]) & (peaks < 0.91 * regions_without_separators.shape[1])]

|

||||

|

||||

peaks_neg = peaks_neg[(peaks_neg > 500) & (peaks_neg < (regions_without_seperators.shape[1] - 500))]

|

||||

peaks_neg = peaks_neg[(peaks_neg > 500) & (peaks_neg < (regions_without_separators.shape[1] - 500))]

|

||||

# print(peaks)

|

||||

interest_pos = z[peaks]

|

||||

|

||||

|

|

@ -703,31 +704,31 @@ def find_num_col_only_image(regions_without_seperators, multiplier=3.8):

|

|||

|

||||

return len(peaks_fin_true), peaks_fin_true

|

||||

|

||||

def find_num_col_by_vertical_lines(regions_without_seperators, multiplier=3.8):

|

||||

regions_without_seperators_0 = regions_without_seperators[:, :, 0].sum(axis=0)

|

||||

def find_num_col_by_vertical_lines(regions_without_separators, multiplier=3.8):

|

||||

regions_without_separators_0 = regions_without_separators[:, :, 0].sum(axis=0)

|

||||

|

||||

##plt.plot(regions_without_seperators_0)

|

||||

##plt.plot(regions_without_separators_0)

|

||||

##plt.show()

|

||||

|

||||

sigma_ = 35 # 70#35

|

||||

|

||||

z = gaussian_filter1d(regions_without_seperators_0, sigma_)

|

||||

z = gaussian_filter1d(regions_without_separators_0, sigma_)

|

||||

|

||||

peaks, _ = find_peaks(z, height=0)

|

||||

|

||||

# print(peaks,'peaksnew')

|

||||

return peaks

|

||||

|

||||

def return_regions_without_seperators(regions_pre):

|

||||

def return_regions_without_separators(regions_pre):

|

||||

kernel = np.ones((5, 5), np.uint8)

|

||||

regions_without_seperators = ((regions_pre[:, :] != 6) & (regions_pre[:, :] != 0)) * 1

|

||||

# regions_without_seperators=( (image_regions_eraly_p[:,:,:]!=6) & (image_regions_eraly_p[:,:,:]!=0) & (image_regions_eraly_p[:,:,:]!=5) & (image_regions_eraly_p[:,:,:]!=8) & (image_regions_eraly_p[:,:,:]!=7))*1

|

||||

regions_without_separators = ((regions_pre[:, :] != 6) & (regions_pre[:, :] != 0)) * 1

|

||||

# regions_without_separators=( (image_regions_eraly_p[:,:,:]!=6) & (image_regions_eraly_p[:,:,:]!=0) & (image_regions_eraly_p[:,:,:]!=5) & (image_regions_eraly_p[:,:,:]!=8) & (image_regions_eraly_p[:,:,:]!=7))*1

|

||||

|

||||

regions_without_seperators = regions_without_seperators.astype(np.uint8)

|

||||

regions_without_separators = regions_without_separators.astype(np.uint8)

|

||||

|

||||

regions_without_seperators = cv2.erode(regions_without_seperators, kernel, iterations=6)

|

||||

regions_without_separators = cv2.erode(regions_without_separators, kernel, iterations=6)

|

||||

|

||||

return regions_without_seperators

|

||||

return regions_without_separators

|

||||

|

||||

|

||||

def put_drop_out_from_only_drop_model(layout_no_patch, layout1):

|

||||

|

|

@ -783,7 +784,7 @@ def putt_bb_of_drop_capitals_of_model_in_patches_in_layout(layout_in_patch):

|

|||

return layout_in_patch

|

||||

|

||||

def check_any_text_region_in_model_one_is_main_or_header(regions_model_1,regions_model_full,contours_only_text_parent,all_box_coord,all_found_texline_polygons,slopes,contours_only_text_parent_d_ordered):

|

||||

cx_main,cy_main ,x_min_main , x_max_main, y_min_main ,y_max_main,y_corr_x_min_from_argmin=find_new_features_of_contoures(contours_only_text_parent)

|

||||

cx_main,cy_main ,x_min_main , x_max_main, y_min_main ,y_max_main,y_corr_x_min_from_argmin=find_new_features_of_contours(contours_only_text_parent)

|

||||

|

||||

length_con=x_max_main-x_min_main

|

||||

height_con=y_max_main-y_min_main

|

||||

|

|

@ -957,7 +958,7 @@ def small_textlines_to_parent_adherence2(textlines_con, textline_iamge, num_col)

|

|||

img_text2 = img_text2.astype(np.uint8)

|

||||

imgray = cv2.cvtColor(img_text2, cv2.COLOR_BGR2GRAY)

|

||||

ret, thresh = cv2.threshold(imgray, 0, 255, 0)

|

||||

cont, hierachy = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

|

||||

cont, hierarchy = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

|

||||

|

||||

# print(cont[0],type(cont))

|

||||

|

||||

|

|

@ -1187,7 +1188,7 @@ def combine_hor_lines_and_delete_cross_points_and_get_lines_features_back_new(im

|

|||

imgray = cv2.cvtColor(img_p_in_ver, cv2.COLOR_BGR2GRAY)

|

||||

ret, thresh = cv2.threshold(imgray, 0, 255, 0)

|

||||

|

||||

contours_lines_ver,hierachy=cv2.findContours(thresh,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

|

||||

contours_lines_ver,hierarchy=cv2.findContours(thresh,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

|

||||

|

||||

slope_lines_ver,dist_x_ver, x_min_main_ver ,x_max_main_ver ,cy_main_ver,slope_lines_org_ver,y_min_main_ver, y_max_main_ver, cx_main_ver=find_features_of_lines(contours_lines_ver)

|

||||

|

||||

|

|

@ -1201,7 +1202,7 @@ def combine_hor_lines_and_delete_cross_points_and_get_lines_features_back_new(im

|

|||

imgray = cv2.cvtColor(img_in_hor, cv2.COLOR_BGR2GRAY)

|

||||

ret, thresh = cv2.threshold(imgray, 0, 255, 0)

|

||||

|

||||

contours_lines_hor,hierachy=cv2.findContours(thresh,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

|

||||

contours_lines_hor,hierarchy=cv2.findContours(thresh,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

|

||||

|

||||

slope_lines_hor,dist_x_hor, x_min_main_hor ,x_max_main_hor ,cy_main_hor,slope_lines_org_hor,y_min_main_hor, y_max_main_hor, cx_main_hor=find_features_of_lines(contours_lines_hor)

|

||||

|

||||

|

|

@ -1219,7 +1220,7 @@ def combine_hor_lines_and_delete_cross_points_and_get_lines_features_back_new(im

|

|||

#print(all_args_uniq,'all_args_uniq')

|

||||

if len(all_args_uniq)>0:

|

||||

if type(all_args_uniq[0]) is list:

|

||||

special_seperators=[]

|

||||

special_separators=[]

|

||||

contours_new=[]

|

||||

for dd in range(len(all_args_uniq)):

|

||||

merged_all=None

|

||||

|

|

@ -1228,7 +1229,7 @@ def combine_hor_lines_and_delete_cross_points_and_get_lines_features_back_new(im

|

|||

some_x_min=x_min_main_hor[all_args_uniq[dd]]

|

||||

some_x_max=x_max_main_hor[all_args_uniq[dd]]

|

||||

|

||||

#img_in=np.zeros(seperators_closeup_n[:,:,2].shape)

|

||||

#img_in=np.zeros(separators_closeup_n[:,:,2].shape)

|

||||

#print(img_p_in_ver.shape[1],some_x_max-some_x_min,'xdiff')

|

||||

diff_x_some=some_x_max-some_x_min

|

||||

for jv in range(len(some_args)):

|

||||

|

|

@ -1245,14 +1246,14 @@ def combine_hor_lines_and_delete_cross_points_and_get_lines_features_back_new(im

|

|||

if diff_max_min_uniques>sum_dis and ( (sum_dis/float(diff_max_min_uniques) ) >0.85 ) and ( (diff_max_min_uniques/float(img_p_in_ver.shape[1]))>0.85 ) and np.std( dist_x_hor[some_args] )<(0.55*np.mean( dist_x_hor[some_args] )):

|

||||

#print(dist_x_hor[some_args],dist_x_hor[some_args].sum(),np.min(x_min_main_hor[some_args]) ,np.max(x_max_main_hor[some_args]),'jalibdi')

|

||||

#print(np.mean( dist_x_hor[some_args] ),np.std( dist_x_hor[some_args] ),np.var( dist_x_hor[some_args] ),'jalibdiha')

|

||||

special_seperators.append(np.mean(cy_main_hor[some_args]))

|

||||

special_separators.append(np.mean(cy_main_hor[some_args]))

|

||||

|

||||

else:

|

||||

img_p_in=img_in_hor

|

||||

special_seperators=[]

|

||||

special_separators=[]

|

||||

else:

|

||||

img_p_in=img_in_hor

|

||||

special_seperators=[]

|

||||

special_separators=[]

|

||||

|

||||

|

||||

img_p_in_ver[:,:,0][img_p_in_ver[:,:,0]==255]=1

|

||||

|

|

@ -1267,7 +1268,7 @@ def combine_hor_lines_and_delete_cross_points_and_get_lines_features_back_new(im

|

|||

ret, thresh = cv2.threshold(imgray, 0, 255, 0)

|

||||

contours_cross,_=cv2.findContours(thresh,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

|

||||

|

||||

cx_cross,cy_cross ,_ , _, _ ,_,_=find_new_features_of_contoures(contours_cross)

|

||||

cx_cross,cy_cross ,_ , _, _ ,_,_=find_new_features_of_contours(contours_cross)

|

||||

|

||||

for ii in range(len(cx_cross)):

|

||||

img_p_in[int(cy_cross[ii])-30:int(cy_cross[ii])+30,int(cx_cross[ii])+5:int(cx_cross[ii])+40,0]=0

|

||||

|

|

@ -1275,8 +1276,8 @@ def combine_hor_lines_and_delete_cross_points_and_get_lines_features_back_new(im

|

|||

|

||||

else:

|

||||

img_p_in=np.copy(img_in_hor)

|

||||

special_seperators=[]

|

||||

return img_p_in[:,:,0],special_seperators

|

||||

special_separators=[]

|

||||

return img_p_in[:,:,0],special_separators

|

||||

|

||||

def return_points_with_boundies(peaks_neg_fin, first_point, last_point):

|

||||

peaks_neg_tot = []

|

||||

|

|

@ -1288,45 +1289,45 @@ def return_points_with_boundies(peaks_neg_fin, first_point, last_point):

|

|||

|

||||

def find_number_of_columns_in_document(region_pre_p, num_col_classifier, pixel_lines, contours_h=None):

|

||||

|

||||

seperators_closeup=( (region_pre_p[:,:,:]==pixel_lines))*1

|

||||

separators_closeup=( (region_pre_p[:,:,:]==pixel_lines))*1

|

||||

|

||||

seperators_closeup[0:110,:,:]=0

|

||||

seperators_closeup[seperators_closeup.shape[0]-150:,:,:]=0

|

||||

separators_closeup[0:110,:,:]=0

|

||||

separators_closeup[separators_closeup.shape[0]-150:,:,:]=0

|

||||

|

||||

kernel = np.ones((5,5),np.uint8)

|

||||

|

||||

seperators_closeup=seperators_closeup.astype(np.uint8)

|

||||

seperators_closeup = cv2.dilate(seperators_closeup,kernel,iterations = 1)

|

||||

seperators_closeup = cv2.erode(seperators_closeup,kernel,iterations = 1)

|

||||

separators_closeup=separators_closeup.astype(np.uint8)

|

||||

separators_closeup = cv2.dilate(separators_closeup,kernel,iterations = 1)

|

||||

separators_closeup = cv2.erode(separators_closeup,kernel,iterations = 1)

|

||||

|

||||

|

||||

seperators_closeup_new=np.zeros((seperators_closeup.shape[0] ,seperators_closeup.shape[1] ))

|

||||

separators_closeup_new=np.zeros((separators_closeup.shape[0] ,separators_closeup.shape[1] ))

|

||||

|

||||

|

||||

|

||||

##_,seperators_closeup_n=self.combine_hor_lines_and_delete_cross_points_and_get_lines_features_back(region_pre_p[:,:,0])

|

||||

seperators_closeup_n=np.copy(seperators_closeup)

|

||||

##_,separators_closeup_n=self.combine_hor_lines_and_delete_cross_points_and_get_lines_features_back(region_pre_p[:,:,0])

|

||||

separators_closeup_n=np.copy(separators_closeup)

|

||||

|

||||

seperators_closeup_n=seperators_closeup_n.astype(np.uint8)

|

||||

##plt.imshow(seperators_closeup_n[:,:,0])

|

||||

separators_closeup_n=separators_closeup_n.astype(np.uint8)

|

||||

##plt.imshow(separators_closeup_n[:,:,0])

|

||||

##plt.show()

|

||||

|

||||

seperators_closeup_n_binary=np.zeros(( seperators_closeup_n.shape[0],seperators_closeup_n.shape[1]) )

|

||||

seperators_closeup_n_binary[:,:]=seperators_closeup_n[:,:,0]

|

||||

separators_closeup_n_binary=np.zeros(( separators_closeup_n.shape[0],separators_closeup_n.shape[1]) )

|

||||

separators_closeup_n_binary[:,:]=separators_closeup_n[:,:,0]

|

||||

|

||||

seperators_closeup_n_binary[:,:][seperators_closeup_n_binary[:,:]!=0]=1

|

||||

#seperators_closeup_n_binary[:,:][seperators_closeup_n_binary[:,:]==0]=255

|

||||

#seperators_closeup_n_binary[:,:][seperators_closeup_n_binary[:,:]==-255]=0

|

||||

separators_closeup_n_binary[:,:][separators_closeup_n_binary[:,:]!=0]=1

|

||||

#separators_closeup_n_binary[:,:][separators_closeup_n_binary[:,:]==0]=255

|

||||

#separators_closeup_n_binary[:,:][separators_closeup_n_binary[:,:]==-255]=0

|

||||

|

||||

|

||||

#seperators_closeup_n_binary=(seperators_closeup_n_binary[:,:]==2)*1

|

||||

#separators_closeup_n_binary=(separators_closeup_n_binary[:,:]==2)*1

|

||||

|

||||

#gray = cv2.cvtColor(seperators_closeup_n, cv2.COLOR_BGR2GRAY)

|

||||

#gray = cv2.cvtColor(separators_closeup_n, cv2.COLOR_BGR2GRAY)

|

||||

|

||||

###

|

||||

|

||||

#print(seperators_closeup_n_binary.shape)

|

||||

gray_early=np.repeat(seperators_closeup_n_binary[:, :, np.newaxis], 3, axis=2)

|

||||

#print(separators_closeup_n_binary.shape)

|

||||

gray_early=np.repeat(separators_closeup_n_binary[:, :, np.newaxis], 3, axis=2)

|

||||

gray_early=gray_early.astype(np.uint8)

|

||||

|

||||

#print(gray_early.shape,'burda')

|

||||

|

|

@ -1335,7 +1336,7 @@ def find_number_of_columns_in_document(region_pre_p, num_col_classifier, pixel_l

|

|||

ret_e, thresh_e = cv2.threshold(imgray_e, 0, 255, 0)

|

||||

|

||||

#print('burda3')

|

||||

contours_line_e,hierachy_e=cv2.findContours(thresh_e,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

|

||||

contours_line_e,hierarchy_e=cv2.findContours(thresh_e,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

|

||||

|

||||

#slope_lines_e,dist_x_e, x_min_main_e ,x_max_main_e ,cy_main_e,slope_lines_org_e,y_min_main_e, y_max_main_e, cx_main_e=self.find_features_of_lines(contours_line_e)

|

||||

|

||||

|

|

@ -1364,9 +1365,9 @@ def find_number_of_columns_in_document(region_pre_p, num_col_classifier, pixel_l

|

|||

|

||||

###

|

||||

|

||||

seperators_closeup_n_binary=cv2.fillPoly(seperators_closeup_n_binary,pts=cnts_hor_e,color=(0,0,0))

|

||||

separators_closeup_n_binary=cv2.fillPoly(separators_closeup_n_binary,pts=cnts_hor_e,color=(0,0,0))

|

||||

|

||||

gray = cv2.bitwise_not(seperators_closeup_n_binary)

|

||||

gray = cv2.bitwise_not(separators_closeup_n_binary)

|

||||

gray=gray.astype(np.uint8)

|

||||

|

||||

|

||||

|

|

@ -1418,18 +1419,18 @@ def find_number_of_columns_in_document(region_pre_p, num_col_classifier, pixel_l

|

|||

vertical = cv2.dilate(vertical,kernel,iterations = 1)

|

||||

# Show extracted vertical lines

|

||||

|

||||

horizontal,special_seperators=combine_hor_lines_and_delete_cross_points_and_get_lines_features_back_new(vertical,horizontal,num_col_classifier)

|

||||

horizontal,special_separators=combine_hor_lines_and_delete_cross_points_and_get_lines_features_back_new(vertical,horizontal,num_col_classifier)

|

||||

|

||||

|

||||

#plt.imshow(horizontal)

|

||||

#plt.show()

|

||||

#print(vertical.shape,np.unique(vertical),'verticalvertical')

|

||||

seperators_closeup_new[:,:][vertical[:,:]!=0]=1

|

||||

seperators_closeup_new[:,:][horizontal[:,:]!=0]=1

|

||||

separators_closeup_new[:,:][vertical[:,:]!=0]=1

|

||||

separators_closeup_new[:,:][horizontal[:,:]!=0]=1

|

||||

|

||||

##plt.imshow(seperators_closeup_new)

|

||||

##plt.imshow(separators_closeup_new)

|

||||

##plt.show()

|

||||

##seperators_closeup_n

|

||||

##separators_closeup_n

|

||||

vertical=np.repeat(vertical[:, :, np.newaxis], 3, axis=2)

|

||||

vertical=vertical.astype(np.uint8)

|

||||

|

||||

|

|

@ -1442,7 +1443,7 @@ def find_number_of_columns_in_document(region_pre_p, num_col_classifier, pixel_l

|

|||

imgray = cv2.cvtColor(vertical, cv2.COLOR_BGR2GRAY)

|

||||

ret, thresh = cv2.threshold(imgray, 0, 255, 0)

|

||||

|

||||

contours_line_vers,hierachy=cv2.findContours(thresh,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

|

||||

contours_line_vers,hierarchy=cv2.findContours(thresh,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

|

||||

slope_lines,dist_x, x_min_main ,x_max_main ,cy_main,slope_lines_org,y_min_main, y_max_main, cx_main=find_features_of_lines(contours_line_vers)

|

||||

#print(slope_lines,'vertical')

|

||||

args=np.array( range(len(slope_lines) ))

|

||||

|

|

@ -1454,7 +1455,7 @@ def find_number_of_columns_in_document(region_pre_p, num_col_classifier, pixel_l

|

|||

x_max_main_ver=x_max_main[slope_lines==1]

|

||||

cx_main_ver=cx_main[slope_lines==1]

|

||||

dist_y_ver=y_max_main_ver-y_min_main_ver

|

||||

len_y=seperators_closeup.shape[0]/3.0

|

||||

len_y=separators_closeup.shape[0]/3.0

|

||||

|

||||

|

||||

#plt.imshow(horizontal)

|

||||

|

|

@ -1465,12 +1466,12 @@ def find_number_of_columns_in_document(region_pre_p, num_col_classifier, pixel_l

|

|||

imgray = cv2.cvtColor(horizontal, cv2.COLOR_BGR2GRAY)

|

||||

ret, thresh = cv2.threshold(imgray, 0, 255, 0)

|

||||

|

||||

contours_line_hors,hierachy=cv2.findContours(thresh,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

|

||||

contours_line_hors,hierarchy=cv2.findContours(thresh,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

|

||||

slope_lines,dist_x, x_min_main ,x_max_main ,cy_main,slope_lines_org,y_min_main, y_max_main, cx_main=find_features_of_lines(contours_line_hors)

|

||||

|

||||

slope_lines_org_hor=slope_lines_org[slope_lines==0]

|

||||

args=np.array( range(len(slope_lines) ))

|

||||

len_x=seperators_closeup.shape[1]/5.0

|

||||

len_x=separators_closeup.shape[1]/5.0

|

||||

|

||||

dist_y=np.abs(y_max_main-y_min_main)

|

||||

|

||||

|

|

@ -1549,56 +1550,56 @@ def find_number_of_columns_in_document(region_pre_p, num_col_classifier, pixel_l

|

|||

matrix_of_lines_ch=np.copy(matrix_l_n)

|

||||

|

||||

|

||||

cy_main_spliters=cy_main_hor[ (x_min_main_hor<=.16*region_pre_p.shape[1]) & (x_max_main_hor>=.84*region_pre_p.shape[1] )]

|

||||

cy_main_splitters=cy_main_hor[ (x_min_main_hor<=.16*region_pre_p.shape[1]) & (x_max_main_hor>=.84*region_pre_p.shape[1] )]

|

||||

|

||||

cy_main_spliters=np.array( list(cy_main_spliters)+list(special_seperators))

|

||||

cy_main_splitters=np.array( list(cy_main_splitters)+list(special_separators))

|

||||

|

||||

if contours_h is not None:

|

||||

try:

|

||||

cy_main_spliters_head=cy_main_head[ (x_min_main_head<=.16*region_pre_p.shape[1]) & (x_max_main_head>=.84*region_pre_p.shape[1] )]

|

||||

cy_main_spliters=np.array( list(cy_main_spliters)+list(cy_main_spliters_head))

|

||||

cy_main_splitters_head=cy_main_head[ (x_min_main_head<=.16*region_pre_p.shape[1]) & (x_max_main_head>=.84*region_pre_p.shape[1] )]

|

||||

cy_main_splitters=np.array( list(cy_main_splitters)+list(cy_main_splitters_head))

|

||||

except:

|

||||

pass

|

||||

args_cy_spliter=np.argsort(cy_main_spliters)

|

||||

args_cy_splitter=np.argsort(cy_main_splitters)

|

||||

|

||||

cy_main_spliters_sort=cy_main_spliters[args_cy_spliter]

|

||||

cy_main_splitters_sort=cy_main_splitters[args_cy_splitter]

|

||||

|

||||

spliter_y_new=[]

|

||||

spliter_y_new.append(0)

|

||||

for i in range(len(cy_main_spliters_sort)):

|

||||

spliter_y_new.append( cy_main_spliters_sort[i] )

|

||||

splitter_y_new=[]

|

||||

splitter_y_new.append(0)

|

||||

for i in range(len(cy_main_splitters_sort)):

|

||||

splitter_y_new.append( cy_main_splitters_sort[i] )

|

||||

|

||||

spliter_y_new.append(region_pre_p.shape[0])

|

||||

splitter_y_new.append(region_pre_p.shape[0])

|

||||

|

||||

spliter_y_new_diff=np.diff(spliter_y_new)/float(region_pre_p.shape[0])*100

|

||||

splitter_y_new_diff=np.diff(splitter_y_new)/float(region_pre_p.shape[0])*100

|

||||

|

||||

args_big_parts=np.array(range(len(spliter_y_new_diff))) [ spliter_y_new_diff>22 ]

|

||||

args_big_parts=np.array(range(len(splitter_y_new_diff))) [ splitter_y_new_diff>22 ]

|

||||

|

||||

|

||||

|

||||

regions_without_seperators=return_regions_without_seperators(region_pre_p)

|

||||

regions_without_separators=return_regions_without_separators(region_pre_p)

|

||||

|

||||

|

||||

length_y_threshold=regions_without_seperators.shape[0]/4.0

|

||||

length_y_threshold=regions_without_separators.shape[0]/4.0

|

||||

|

||||

num_col_fin=0

|

||||

peaks_neg_fin_fin=[]

|

||||

|

||||

for iteils in args_big_parts:

|

||||

for itiles in args_big_parts:

|

||||

|

||||

|

||||

regions_without_seperators_teil=regions_without_seperators[int(spliter_y_new[iteils]):int(spliter_y_new[iteils+1]),:,0]

|

||||

#image_page_background_zero_teil=image_page_background_zero[int(spliter_y_new[iteils]):int(spliter_y_new[iteils+1]),:]

|

||||

regions_without_separators_tile=regions_without_separators[int(splitter_y_new[itiles]):int(splitter_y_new[itiles+1]),:,0]

|

||||

#image_page_background_zero_tile=image_page_background_zero[int(splitter_y_new[itiles]):int(splitter_y_new[itiles+1]),:]

|

||||

|

||||

#print(regions_without_seperators_teil.shape)

|

||||

##plt.imshow(regions_without_seperators_teil)

|

||||

#print(regions_without_separators_tile.shape)

|

||||

##plt.imshow(regions_without_separators_tile)

|

||||

##plt.show()

|

||||

|

||||

#num_col, peaks_neg_fin=self.find_num_col(regions_without_seperators_teil,multiplier=6.0)

|

||||

#num_col, peaks_neg_fin=self.find_num_col(regions_without_separators_tile,multiplier=6.0)

|

||||

|

||||

#regions_without_seperators_teil=cv2.erode(regions_without_seperators_teil,kernel,iterations = 3)

|

||||

#regions_without_separators_tile=cv2.erode(regions_without_separators_tile,kernel,iterations = 3)

|

||||

#

|

||||

num_col, peaks_neg_fin=find_num_col(regions_without_seperators_teil,multiplier=7.0)

|

||||

num_col, peaks_neg_fin=find_num_col(regions_without_separators_tile,multiplier=7.0)

|

||||

|

||||

if num_col>num_col_fin:

|

||||

num_col_fin=num_col
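The hunk above builds `splitter_y_new` from the sorted y-centres of page-wide horizontal separators, with the page top and bottom added as boundaries, and then only analyses bands taller than 22% of the page height. A minimal sketch of that band selection, in plain Python; the function name `big_bands` and its parameters are hypothetical, and only the 22% cut-off is taken from the diff.

```python
def big_bands(splitter_centers, page_height, min_percent=22.0):
    """Split a page at horizontal separators and keep only the tall bands."""
    # The page top and bottom always act as boundaries.
    bounds = [0] + sorted(splitter_centers) + [page_height]
    bands = []
    for top, bottom in zip(bounds, bounds[1:]):
        percent = (bottom - top) / float(page_height) * 100
        if percent > min_percent:
            bands.append((int(top), int(bottom)))
    return bands

# Three separators on a 2000-pixel-high page, given in arbitrary order.
bands = big_bands([1200.0, 300.0, 1350.0], page_height=2000)
```

In this sketch each returned band corresponds to one slice `regions_without_separators[top:bottom, :, 0]` that the loop in the diff passes to `find_num_col`.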

|

||||

|

|

@ -1614,25 +1615,25 @@ def find_number_of_columns_in_document(region_pre_p, num_col_classifier, pixel_l

|

|||

#print(peaks_neg_fin_fin,'peaks_neg_fin_fintaza')

|

||||

|

||||

|

||||

return num_col_fin, peaks_neg_fin_fin,matrix_of_lines_ch,spliter_y_new,seperators_closeup_n

|

||||

return num_col_fin, peaks_neg_fin_fin,matrix_of_lines_ch,splitter_y_new,separators_closeup_n

|

||||

|

||||

|

||||

def return_boxes_of_images_by_order_of_reading_new(spliter_y_new, regions_without_seperators, matrix_of_lines_ch, num_col_classifier):

|

||||

def return_boxes_of_images_by_order_of_reading_new(splitter_y_new, regions_without_separators, matrix_of_lines_ch, num_col_classifier):

|

||||