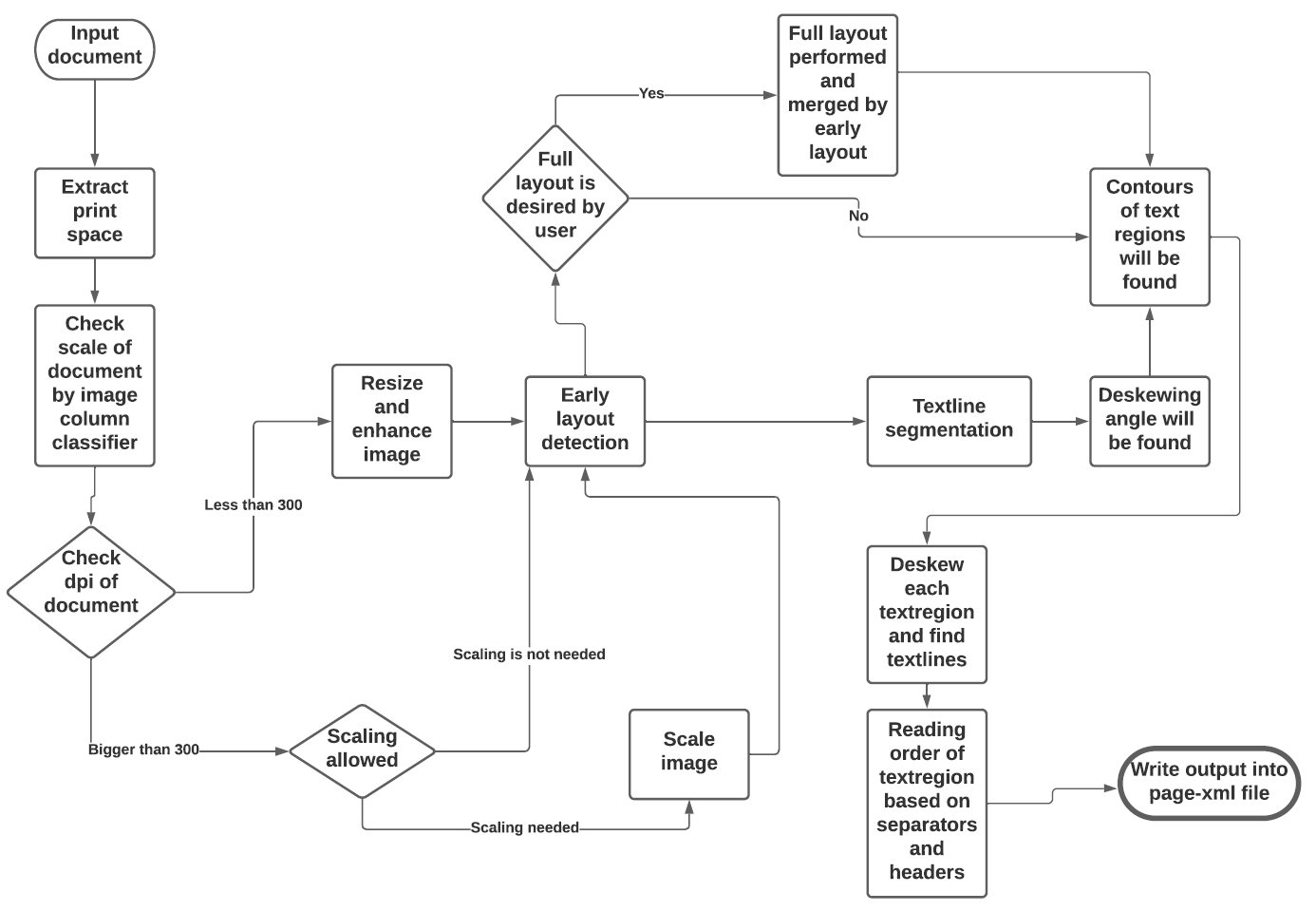

From 41d6ddeae8834718c53af61c35a496e4dd27ad69 Mon Sep 17 00:00:00 2001
From: Clemens Neudecker <952378+cneud@users.noreply.github.com>
Date: Mon, 30 Nov 2020 15:12:19 +0100
Subject: [PATCH] add flowchart

---
 README.md | 4 +++-
 1 file changed, 3 insertions(+), 1 deletion(-)

diff --git a/README.md b/README.md
index 8c16468..3a500ca 100644
--- a/README.md
+++ b/README.md
@@ -18,7 +18,7 @@ It can currently detect the following layout classes/elements:
 
 In addition, the tool can be used to detect the _Reading Order_ of regions. The final goal is to feed the output to an OCR model.
 
-The tool uses a combination of various models and heuristics:
+The tool uses a combination of various models and heuristics (see flowchart below for the different stages and how they interact):
 * [Border detection](https://github.com/qurator-spk/eynollah#border-detection)
 * [Layout detection](https://github.com/qurator-spk/eynollah#layout-detection)
 * [Textline detection](https://github.com/qurator-spk/eynollah#textline-detection)
@@ -28,6 +28,8 @@ The tool uses a combination of various models and heuristics:
 
 The first three stages are based on [pixelwise segmentation](https://github.com/qurator-spk/sbb_pixelwise_segmentation).
 
+
+
 ## Border detection
 For the purpose of text recognition (OCR) and in order to avoid noise being introduced from texts outside the printspace, one first needs to detect the border of the printed frame. This is done by a binary pixelwise-segmentation model trained on a dataset of 2,000 documents where about 1,200 of them come from the [dhSegment](https://github.com/dhlab-epfl/dhSegment/) project (you can download the dataset from [here](https://github.com/dhlab-epfl/dhSegment/releases/download/v0.2/pages.zip)) and the remainder having been annotated in SBB. For border detection, the model needs to be fed with the whole image at once rather than separated in patches.